Most AI Projects Fail in Blue-Collar Environments for One Reason: They Never Touch Reality.

AI doesn’t fail in the boardroom. It fails at 5:45 a.m. in a muddy lot, a noisy plant, a loading dock, or a hospital hallway—where the “system” is still paper, tribal knowledge, and a dozen disconnected tools.

If you want AI to produce real operational outcomes for frontline work—less overtime, lower turnover, fewer safety incidents, less rework—you don’t start with a model. You start with three non-negotiables.

These are the three steps any organization needs to focus on to get AI projects working in blue-collar environments.

1) Build the Data Moat: Real-Time Field Data or Nothing Works

The hardest part of “AI for frontline ops” isn’t algorithms. It’s getting reality into a system in the first place.

Historically, field teams used paper, whiteboards, radios, and disconnected point solutions. Even when data existed, it often required dual entry after the fact—someone retyping notes later to make it “usable.” That creates three killer problems:

- Time lag: insights arrive too late to matter

- Accuracy loss: humans summarize, forget, or “clean it up”

- Action gap: nothing triggers in-the-moment decisions

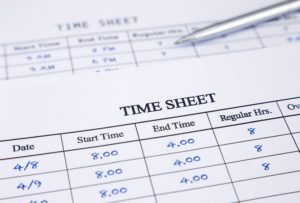

If your data isn’t captured as work happens, AI has nothing to learn from and nothing to act on. A “data moat” in blue-collar environments means the organization can reliably capture the operational truth:

- who worked

- where they worked

- what they did

- what went wrong

- what it cost

- what happened next
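One way to make that concrete is a record that carries all six facts plus two timestamps: when the work happened and when the system learned about it. The sketch below is a minimal illustration in Python, not a prescribed schema; `FieldEvent`, its field names, and the five-minute threshold are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class FieldEvent:
    """One record of work as it happened (all field names are hypothetical)."""
    worker_id: str             # who worked
    site_id: str               # where they worked
    task: str                  # what they did
    issue: Optional[str]       # what went wrong, if anything
    labor_cost: float          # what it cost
    follow_up: Optional[str]   # what happened next
    occurred_at: datetime      # when the work happened
    recorded_at: datetime      # when it reached the system

    def capture_lag(self) -> timedelta:
        """Time between the work happening and the system knowing about it."""
        return self.recorded_at - self.occurred_at


def is_real_time(event: FieldEvent, max_lag: timedelta = timedelta(minutes=5)) -> bool:
    """Flag after-the-fact entry; the 5-minute default is an assumed threshold."""
    return event.capture_lag() <= max_lag
```

A shift that started at 5:45 a.m. but was typed in at 4:30 p.m. shows a capture lag of 10 hours 45 minutes. That gap is the time lag problem in measurable form.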

Editor’s blunt rule: If the data only gets in when a manager “closes the loop later,” your AI project is already behind.

2) Create Context Windows: Data Without Context Produces “Smart-Sounding Wrong”

Even with good data, most organizations hit the next wall: LLMs don’t know your operation.

A general-purpose model can speak fluently, but it won’t automatically understand:

- how your roles, sites, unions, and rules work

- why certain exceptions matter and others don’t

- what “good” looks like for each crew, shift, contract, or location

- what policies and constraints are non-negotiable (safety, compliance, customer service-level agreements, or SLAs)

So when you ask a generic LLM to “analyze the data,” you often get output that is:

- plausible

- polished

- and less meaningful than it sounds

This is where “context windows” come in.

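A context window, as used here, is the operational frame that tells AI how to interpret the same data differently depending on conditions. (In LLM terminology the phrase usually means the amount of text a model can process at once; this article uses it more broadly.) It’s the difference between: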

- “John worked 12 hours” (data)

and

- “John worked 12 hours because a call-out triggered overtime (OT) rules at Site B, and the SLA penalty is higher than the OT cost” (context)
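The context version of that sentence is really a small cost comparison. Here is a rough sketch of the arithmetic, assuming a hypothetical 8-hour standard shift, a 1.5x overtime multiplier, and made-up dollar figures (none of these come from a real contract or labor rule):

```python
def overtime_hours(hours_worked: float, standard_shift: float = 8.0) -> float:
    """Hours beyond the standard shift (8 hours is an assumed threshold)."""
    return max(0.0, hours_worked - standard_shift)


def overtime_cost(ot_hours: float, base_rate: float, multiplier: float = 1.5) -> float:
    """Cost of the overtime hours at an assumed 1.5x premium."""
    return ot_hours * base_rate * multiplier


def overtime_is_cheaper(hours_worked: float, base_rate: float, sla_penalty: float) -> bool:
    """True when paying overtime costs less than missing the customer commitment."""
    return overtime_cost(overtime_hours(hours_worked), base_rate) < sla_penalty


# Illustrative numbers only: a 12-hour shift at $30/hour against a $500 penalty.
ot_cost = overtime_cost(overtime_hours(12), base_rate=30.0)  # 4 h * $30 * 1.5 = $180
print(ot_cost, overtime_is_cheaper(12, base_rate=30.0, sla_penalty=500.0))
```

Without the penalty figure, the same 12-hour shift just looks like overtime that should have been avoided.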

To make AI useful in the field, your system must supply the model with the right context at the right moment—things like:

- job type + required qualifications

- site rules + safety constraints

- scheduling policies, labor rules, overtime thresholds

- equipment availability

- customer commitments + penalties

- historical patterns and what changed today
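In practice, supplying the window often means assembling these facts into text that travels with every question sent to the model. The sketch below shows one possible shape; `OperationalContext`, `build_prompt`, and the sample values are hypothetical, and no particular LLM API is assumed (the function only builds a string).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class OperationalContext:
    """Hypothetical container for the context items listed above."""
    job_type: str
    required_qualifications: List[str]
    site_rules: List[str]
    overtime_threshold_hours: float
    equipment_available: List[str]
    customer_commitments: List[str]
    changes_today: List[str]


def build_prompt(ctx: OperationalContext, observation: str, question: str) -> str:
    """Put the operational frame ahead of the raw data and the question."""
    def bullets(items: List[str]) -> str:
        return "\n".join(f"  - {item}" for item in items) or "  - (none)"

    return "\n".join([
        "OPERATIONAL CONTEXT",
        f"Job type: {ctx.job_type}",
        "Required qualifications:", bullets(ctx.required_qualifications),
        "Site rules and safety constraints:", bullets(ctx.site_rules),
        f"Overtime threshold: {ctx.overtime_threshold_hours} hours per shift",
        "Equipment available:", bullets(ctx.equipment_available),
        "Customer commitments and penalties:", bullets(ctx.customer_commitments),
        "What changed today:", bullets(ctx.changes_today),
        "",
        f"OBSERVATION: {observation}",
        f"QUESTION: {question}",
    ])


# Sample values are invented for illustration.
ctx = OperationalContext(
    job_type="dock loading",
    required_qualifications=["forklift certification"],
    site_rules=["hi-vis vest required on the dock"],
    overtime_threshold_hours=8.0,
    equipment_available=["forklift 2"],
    customer_commitments=["Site B delivery SLA with a late penalty"],
    changes_today=["one call-out on the morning shift"],
)
print(build_prompt(ctx, "John worked 12 hours", "Was the overtime justified?"))
```

The design choice worth noting is the ordering: rules and constraints come first, so the raw observation is read through them instead of on its own.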

The point: LLMs don’t magically “understand your business.” You have to give them the window.

3) Aim at the Pain You Can Measure: Start Where Small Wins Create Big Value

This is where most companies blow it: they aim AI at “innovation,” not outcomes.

The smartest strategy is boring on purpose:

Start with the problems you already track—and where even a small improvement creates obvious ROI.

If you have excess:

- overtime

- turnover

- rework

- safety incidents

- schedule churn

- time leakage / timecard issues

- compliance exposure

…don’t start with a grand transformation. Start with one measurable target where the math is undeniable.

Use your own metrics and benchmarks to pick the target. But here’s the key: once the data and context are in place, the system itself can point you toward the best opportunity, because it can compare patterns across sites, shifts, managers, and conditions.
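A first pass at that kind of comparison does not need a model at all. As a rough sketch, assuming shift records are available as simple `(site, regular_hours, overtime_hours)` tuples (a hypothetical layout with invented numbers), the function below ranks sites by the share of hours worked as overtime:

```python
from collections import defaultdict
from typing import Iterable, List, Tuple


def overtime_share_by_site(
    shifts: Iterable[Tuple[str, float, float]]
) -> List[Tuple[str, float]]:
    """Rank sites by overtime hours as a fraction of all hours, highest first."""
    totals = defaultdict(lambda: [0.0, 0.0])  # site -> [regular, overtime]
    for site, regular, overtime in shifts:
        totals[site][0] += regular
        totals[site][1] += overtime

    shares = {
        site: overtime / (regular + overtime)
        for site, (regular, overtime) in totals.items()
        if regular + overtime > 0  # skip sites with no recorded hours
    }
    return sorted(shares.items(), key=lambda item: item[1], reverse=True)


# Invented sample data for illustration.
sample_shifts = [("A", 40, 0), ("B", 40, 10), ("A", 40, 5), ("C", 30, 10)]
for site, share in overtime_share_by_site(sample_shifts):
    print(f"Site {site}: {share:.1%} of hours are overtime")
```

The same grouping works for shifts, managers, or conditions by swapping the first element of each tuple.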

What you’re looking for is the “small lever with a big result,” like:

- reducing OT by a few percentage points

- preventing a small number of avoidable incidents

- cutting rework on the most common failure mode

- improving early retention in the first 30–60 days

- tightening schedule adherence on the highest-cost location

Editor’s rule: If you can’t define “before vs. after” in one sentence, it’s not your first AI project.
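A one-sentence target can be produced from two numbers. A minimal sketch, where `before_after_sentence` and the sample figures are illustrative assumptions and not benchmarks:

```python
def before_after_sentence(metric: str, before: float, after: float, period: str) -> str:
    """Summarize a measurable target as a single before-vs-after sentence."""
    if before <= 0:
        raise ValueError("baseline must be positive to compute a percentage change")
    pct_change = (after - before) * 100 / before
    return f"{metric} went from {before:g} to {after:g} {period} ({pct_change:+.1f}%)."


# Made-up numbers for illustration only.
print(before_after_sentence("Overtime hours at Site B", 120, 108, "per week"))
```

If the inputs to this function are hard to find, that is a sign the project depends on data the organization is not yet capturing.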

The Bottom Line

Blue-collar AI isn’t a “model problem.” It’s an operational reality problem.

If you want real-world AI implementation, focus on these three steps—in this order:

- Data Moat: capture real-time field reality (no dual-entry, no delay)

- Context Windows: give AI the rules, constraints, and situational framing

- Measurable Pain: start where a small change produces clear value

Do this, and AI stops being a demo. It becomes a lever.